A Dead Simple Way to VLM Parsing

This post demonstrates a straightforward approach to using VLMs for parsing unstructured data from images. While simple, this method can be easily extended to handle more complex scenarios, such as extracting information from PDFs. If you want, you can build a more complex parser along the lines of LlamaParse, with additional steps such as OCR and table parsing.

Let's consider a common scenario: you have a collection of images and need to extract text from them. Or perhaps you want to generate descriptions of pictures to enable full-text search. It's time to hire a VLM (a Visual Language Model, also called a multimodal model) to work for you.

Since I work extensively with llama_index, I'll be using it in the examples. However, the core concepts remain the same, and you can adapt them to work with any tools or clients of your choice.

Show Me The Code

First, we need to define a prompt that tells the VLM what we want from it.
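A minimal sketch of what such a prompt could look like, assuming we want the visible text transcribed plus a short description of everything else. The name `IMAGE_PARSE_PROMPT` and the wording are illustrative assumptions, not the original prompt from this post.

```python
# Hypothetical prompt: both the constant name and the wording are assumptions
# made for illustration. Tune the instructions to your own images.
IMAGE_PARSE_PROMPT = """\
You are an assistant that extracts information from images.

1. Transcribe all visible text exactly as it appears, in reading order.
2. Describe tables row by row, keeping the column headers.
3. Briefly describe any non-text content (charts, icons, photos).
4. If part of the image is unreadable, say so instead of guessing.

Return the result as Markdown.
"""
```

Keeping the prompt in a single constant makes it easy to swap in a different one per use case, for example a description-only prompt for full-text search.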

Let's build a minimal but functional image parser:

The parser is a small class whose docstring reads "A simple parser for extracting information from images using VLMs." It takes one argument, model (a MultiModalLLM): the multi-modal language model to use for parsing. It has two methods: one converts the image to base64 encoding, and an async one parses the image content and returns the result.

The core idea: we convert the image to base64 and pass it to the VLM together with the prompt.
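To make the base64 step concrete, here is a library-agnostic sketch using only the standard library. The names `VisionModel`, `describe`, `encode_image`, and `EchoModel` are hypothetical stand-ins: with llama_index the model would be a MultiModalLLM and the encoded image would be handed over through that library's own image type, so treat this as the shape of the idea rather than the post's exact code.

```python
import base64
from pathlib import Path
from typing import Protocol


class VisionModel(Protocol):
    """Hypothetical interface: anything that accepts a prompt and a base64 image."""

    async def describe(self, prompt: str, image_b64: str) -> str: ...


def encode_image(image_path: str) -> str:
    """Read an image file and return its bytes as a base64 string."""
    return base64.b64encode(Path(image_path).read_bytes()).decode("utf-8")


class ImageParser:
    """Send a prompt plus a base64-encoded image to a vision model."""

    def __init__(self, model: VisionModel, prompt: str) -> None:
        self.model = model
        self.prompt = prompt

    async def parse(self, image_path: str) -> str:
        image_b64 = encode_image(image_path)
        return await self.model.describe(self.prompt, image_b64)


class EchoModel:
    """Fake model for local testing: reports the size of the decoded image."""

    async def describe(self, prompt: str, image_b64: str) -> str:
        size = len(base64.b64decode(image_b64))
        return f"received {size} bytes"
```

A fake model like `EchoModel` lets you exercise the encoding and plumbing without spending any API calls; swapping in a real client only requires an object with the same async method.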

Taking It for a Spin

Here's how to use our shiny new parser:

The usage comes down to three steps: initialize the VLM, create our parser, and then await the parser's parse call on an image.
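Because parsing is awaited, a script needs an event loop to drive it. The sketch below shows one way to wire that up as a command-line entry point that takes the image path. `parse_image` is a hypothetical placeholder for the three steps above (initialize the VLM, create the parser, parse the image), and the argument name `image_path` is an assumption.

```python
import argparse
import asyncio
from typing import Optional, Sequence


async def parse_image(image_path: str) -> str:
    """Hypothetical placeholder for the real work: initialize the VLM,
    create the parser, and await its parse call on image_path."""
    await asyncio.sleep(0)  # stands in for the network round trip
    return f"parsed: {image_path}"


def build_arg_parser() -> argparse.ArgumentParser:
    arg_parser = argparse.ArgumentParser(description="Parse an image with a VLM")
    arg_parser.add_argument("image_path", help="path to the image to parse")
    return arg_parser


def main(argv: Optional[Sequence[str]] = None) -> str:
    """Parse CLI arguments, run the coroutine to completion, print the result."""
    args = build_arg_parser().parse_args(argv)
    result = asyncio.run(parse_image(args.image_path))
    print(result)
    return result
```

Calling `main()` with no arguments from the script's entry point reads the path from the command line; passing a list such as `main(["photo.png"])` is convenient for tests.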

Running the Demo

I have a demo repo that uses a VLM to parse images; you can see the code here.

Just run the script with the image path:

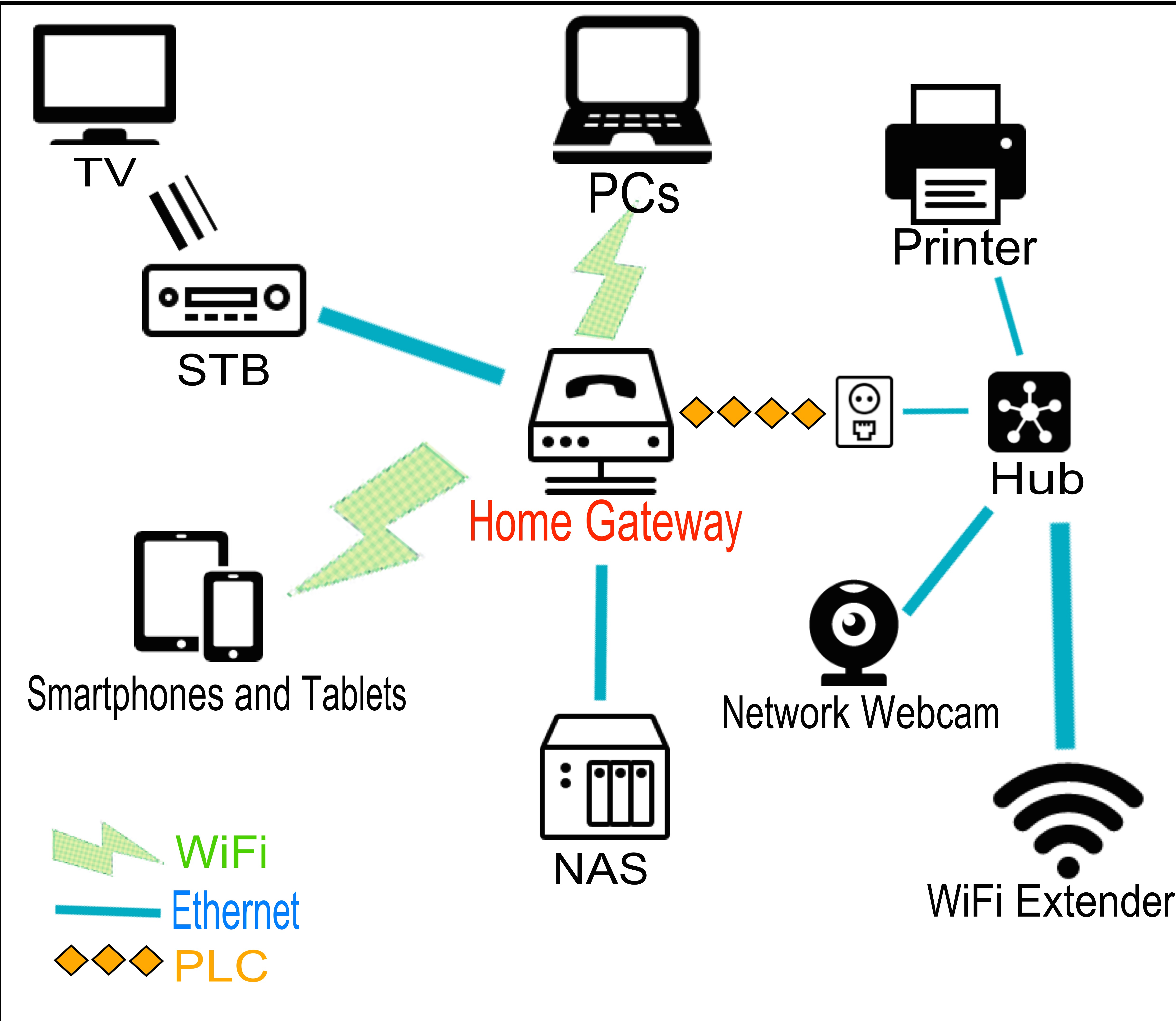

The example image is a screenshot of a home gateway, from Home gateway example:

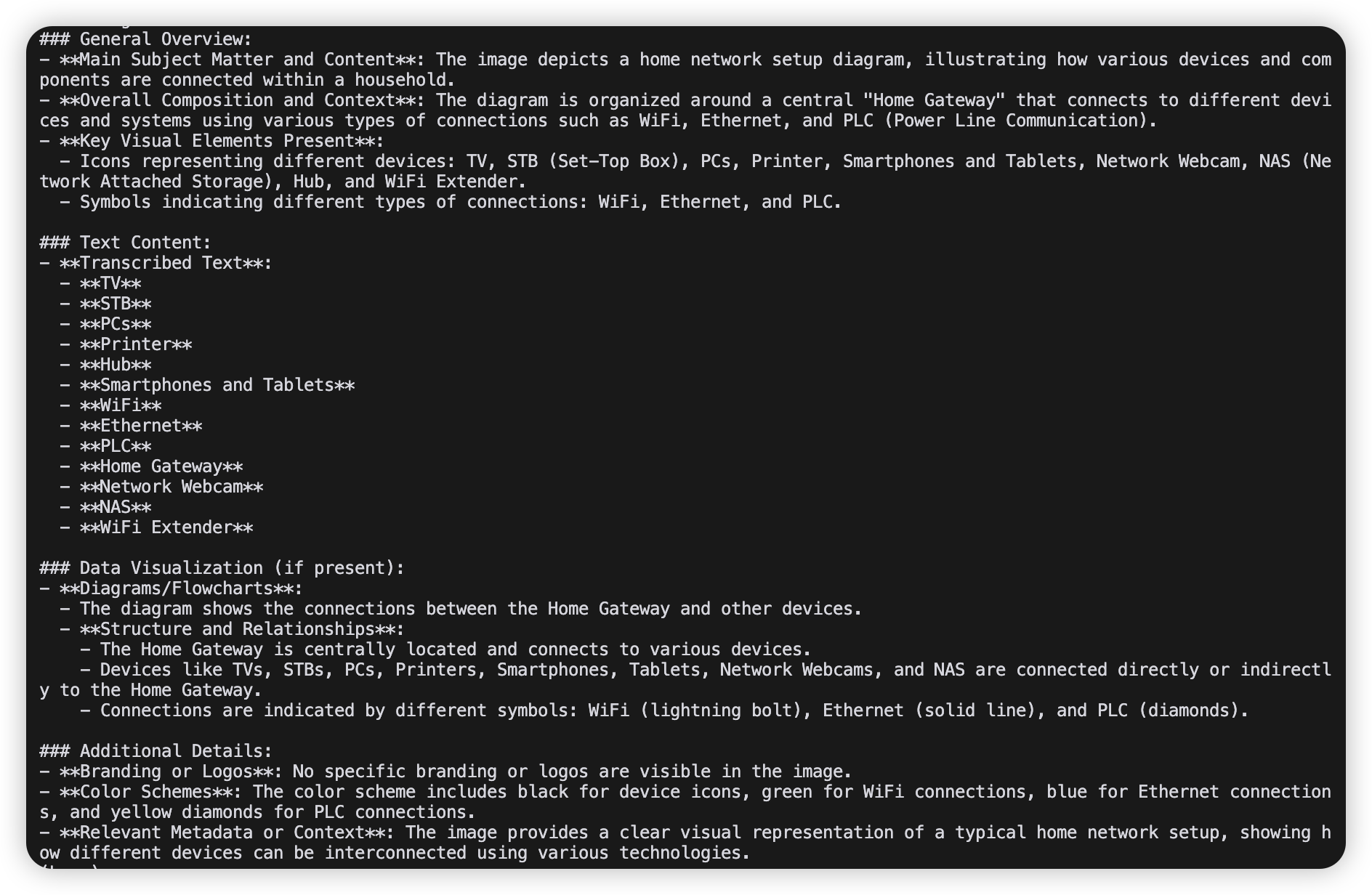

And the result in the terminal is:

Bonus: Extending the Parser

Here are some practical ways to extend this simple parser:

- Format Support
  - Handle PDFs using tools like pdf2image
  - Process videos using opencv-python for frame extraction

- Output Options
  - Generate JSON output: await the parser, then add your JSON transformation logic before returning the result
  - Create custom templates using Jinja2 or similar

- Processing Pipeline
  - Integrate Tesseract OCR for text extraction
  - Try to support table parsing
  - Implement batch processing with asyncio

- Integration Ideas
  - Connect with llama-index for RAG applications
  - Build automated workflows
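Two of the ideas above, batch processing with asyncio and JSON output, fit together naturally. The following is a rough sketch under stated assumptions: `parse_one` is any async callable that maps an image path to parsed text (for example, the parser's parse method), and `parse_batch`, `to_json`, `max_concurrency`, and the record keys `image` and `content` are all hypothetical names chosen for illustration.

```python
import asyncio
import json
from typing import Awaitable, Callable, Dict, List


async def parse_batch(
    image_paths: List[str],
    parse_one: Callable[[str], Awaitable[str]],
    max_concurrency: int = 4,
) -> Dict[str, str]:
    """Parse many images concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(path: str) -> str:
        # The semaphore caps how many requests hit the model at once.
        async with semaphore:
            return await parse_one(path)

    # gather preserves input order, so results line up with image_paths.
    results = await asyncio.gather(*(guarded(p) for p in image_paths))
    return dict(zip(image_paths, results))


def to_json(results: Dict[str, str]) -> str:
    """Serialize path -> parsed text as a JSON array of records."""
    records = [{"image": path, "content": text} for path, text in results.items()]
    return json.dumps(records, ensure_ascii=False, indent=2)
```

Capping concurrency with a semaphore is a simple way to stay under a provider's rate limits. Note that duplicate paths collapse into one entry because the results are keyed by path.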

Thanks for reading! If you have any questions or suggestions, please feel free to leave a comment.